Data is now processed and stored in a fundamentally different way. Data processing used to happen mainly in centralized data centers, usually far from the devices generating the data. Today, data no longer has to travel great distances to a centralized cloud, as it does in the traditional model where processing sits far from the source.

Edge computing brings computation as close as possible to the devices and sensors that generate data. Real-time analytics, lower latency, and improved network efficiency are increasingly essential in today’s digital landscape. From IoT devices and 5G networks to smart cities, autonomous vehicles, and next-generation industrial automation, the edge is shaping trends across high technology.

This guide covers the essentials of edge computing in depth: its definition, architecture, comparison with cloud computing, practical applications, and synergy with fog computing. Find out how this technology can improve processing capabilities, lower latency, and better manage network resources. Whether you are a technology enthusiast, an industry professional, or an IoT developer, this guide offers insight into the role edge computing plays in our digitally transforming future.

Introduction to Edge Computing ✨

Definition of Edge Computing

Edge computing is a distributed IT architecture in which processing takes place close to the data source rather than only on centralized cloud servers. It deploys computational resources at the “edge” of the network, near IoT devices, sensors, smartphones, or other endpoints, to provide fast data analysis, decision-making, and response times. This allows critical operations to occur in real time without the latency incurred by sending data to distant data centers.

In lay terms, edge computing decentralizes data processing to improve efficiency and latency. The concept relies on the strategic placement of servers, gateways, or processors to perform tasks such as filtering, aggregation, or preliminary analysis of data before sending it on to broader cloud infrastructure. This becomes essential when time-critical decisions must be made, for example in autonomous vehicle navigation, industrial automation, or emergency response systems.
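The filtering and aggregation step described above can be sketched in a few lines of Python. This is only an illustration: the function name `summarize_readings`, the valid temperature range, and the sample values are assumptions made for the example, not part of any particular edge platform.

```python
from statistics import mean

def summarize_readings(readings, low=-40.0, high=85.0):
    """Drop out-of-range values, then reduce the rest to a compact summary.

    The range limits are hypothetical sensor limits chosen for this example.
    """
    valid = [r for r in readings if low <= r <= high]
    if not valid:
        return None
    return {
        "count": len(valid),
        "min": min(valid),
        "max": max(valid),
        "mean": round(mean(valid), 2),
        "dropped": len(readings) - len(valid),
    }

# Seven raw temperature readings, two of them obvious sensor glitches.
raw = [21.4, 21.6, 999.0, 21.5, 21.9, -120.0, 22.0]
summary = summarize_readings(raw)
print(summary)
```

Only the small `summary` dictionary would need to travel to the cloud, instead of every raw reading.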

The growing demand for edge computing stems from the growth in connected devices and the consequent need for quick decisions based on the information they produce. As the technology advances, the principles that govern this paradigm (architecture, benefits, and real-world applications) become fundamental to realizing its full potential in modern applications.

The Importance of Edge Computing in Modern Technology

With the Internet of Things (IoT) and 5G networks rapidly taking shape, along with a growing number of smart devices, data now accumulates at unprecedented scale. Traditional cloud computing models, while robust, struggle to process vast amounts of data instantaneously. High latency, bandwidth limitations, and privacy concerns call for local data handling, which makes edge computing one of the most relevant developments in modern technology.

Edge computing is critical for applications where milliseconds matter, such as autonomous driving, medical monitoring, or industrial control systems, because it enables real-time analytics by processing data close to its source. Local data processing also reduces direct dependence on cloud infrastructure, which reduces congestion and lowers data transfer and storage costs. The whole network becomes more efficient, particularly in remote or bandwidth-constrained settings.

Edge computing also addresses privacy and security challenges by processing sensitive information locally rather than sending it over a network, where it is more exposed to breaches. Its contribution to reducing latency is vital for a seamless user experience in areas as varied as augmented reality, live video streaming, and interactive gaming. Edge computing has become increasingly important in a world of distributed architectures where industries seek highly resilient operations.

Overview of Edge Computing Architecture

Edge computing architecture consists of several layers that work together for efficient data processing, storage, and analysis. The basic building blocks are edge devices, such as sensors, cameras, smartphones, and industrial machines, that generate raw data. These devices connect over local networks to edge nodes or gateways, which filter, aggregate, or perform initial analysis on that raw data.

Beyond these edge nodes are regional data centers or cloud integration points that handle wider processing, archival storage, and complex analytics. The architecture is designed to be scalable, flexible, and resilient, relying on distribution to meet variable workload needs.

The architecture emphasizes local data storage so that useful information can be accessed rapidly while limiting the need for constant communication with centralized data centers. With real-time analytics, edge computing systems can also support time-critical applications. The architecture may also incorporate computational resources capable of running machine learning models and data processing algorithms locally, further improving responsiveness for users.

Security protocols, network topology, and hardware specifications of edge devices also form part of the edge computing architecture. A modular architecture allows organizations to deploy these components according to particular use cases: industrial automation, smart cities, health care, and so on.

Edge Computing vs Cloud Computing

Key Differences Between Edge and Cloud Computing

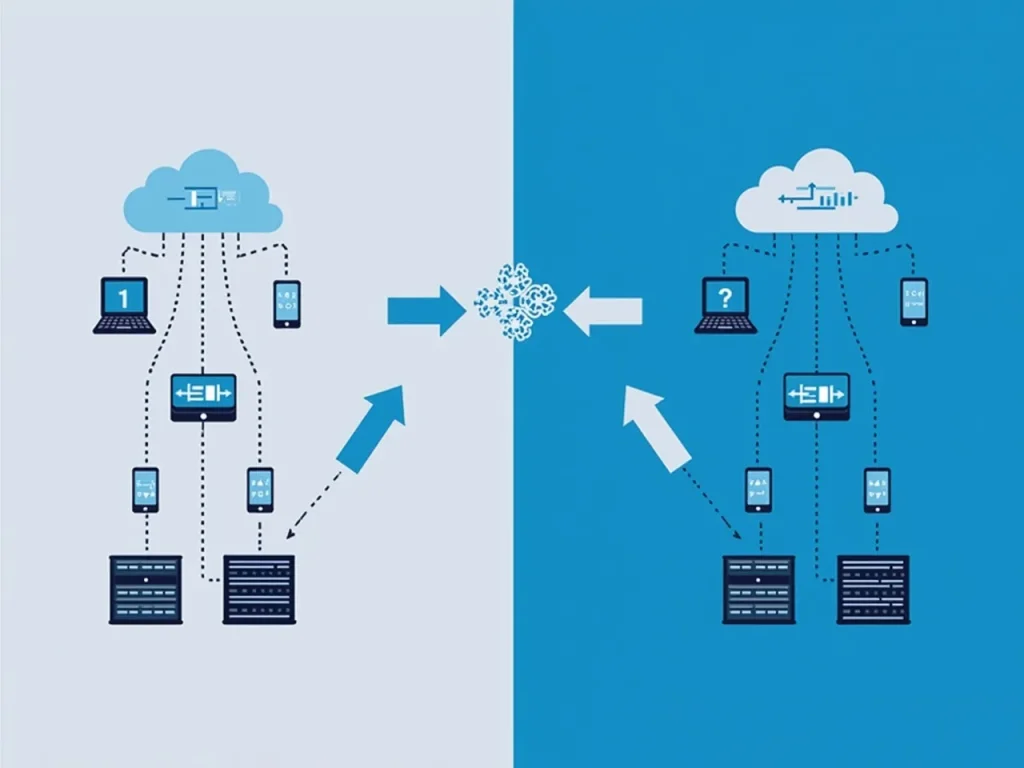

Edge and cloud computing share the same motivation: to process, store, and analyze data. However, they differ significantly in architecture, proximity to data sources, and typical applications. Understanding these differences helps organizations optimize their infrastructure for their operational requirements.

Cloud computing processes data centrally, in facilities that may be many miles away from the end user or device. With its flexible scaling, storage capacity, and computational power, it is best suited to asynchronous processing, long-term data analysis, and resource-intensive applications. In contrast, edge computing decentralizes processing and pushes small-scale computing units close to data sources, enabling real-time analytics and prompt response.

An important distinction is latency. In cloud computing, data must travel to a distant data center and back, and the delay grows with every intervening network hop. For applications that demand immediate insight, this added latency is a real cost. Edge computing significantly reduces it by doing the heavy lifting locally, which leads to faster decisions.

Cost and bandwidth handling also differ. Data transmission and cloud storage charges can run high in cloud environments where large volumes of data are transferred. Edge computing reduces these costs by processing data locally and transmitting only what is needed, or a summary, to the cloud, which improves network efficiency and makes use of local data storage. Nevertheless, the two models often work in conjunction, and when they do, each complements the capabilities of the other.
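A back-of-the-envelope calculation shows how much traffic local summarization can remove. All figures here are hypothetical and chosen only to make the arithmetic easy to follow: 1,000 devices, 200-byte messages, one raw reading per second versus one summary per minute.

```python
def daily_megabytes(devices, payload_bytes, messages_per_day):
    """Total upstream traffic per day in megabytes (1 MB = 1,000,000 bytes)."""
    return devices * payload_bytes * messages_per_day / 1_000_000

SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_DAY = 24 * 60

# Assumed scenario: every raw reading is sent straight to the cloud.
raw_mb = daily_megabytes(1000, 200, SECONDS_PER_DAY)

# Assumed scenario: each device sends one 200-byte summary per minute.
summary_mb = daily_megabytes(1000, 200, MINUTES_PER_DAY)

print(f"raw: {raw_mb:.0f} MB/day, summarized: {summary_mb:.0f} MB/day")
print(f"reduction factor: {raw_mb / summary_mb:.0f}x")
```

Under these assumptions the upstream volume drops from 17,280 MB to 288 MB per day, a factor of 60. Real savings depend on payload sizes, sampling rates, and how much detail the cloud side actually needs.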

Advantages of Edge Computing Over Cloud Computing

Cloud platforms have matured considerably, but edge computing has specific advantages in certain situations. One is local processing capability: action can be taken in real time rather than waiting on a cloud response, which matters where delays could have serious consequences, as in manufacturing, healthcare, and transportation.

A further advantage is privacy and security. Keeping data on local devices or secure edge nodes limits the attack surface and reduces the risk of breaches while data is in transit over private or public networks. Only strictly necessary data is transmitted, which saves bandwidth and reduces congestion and operational costs.

Edge computing also increases resilience and reliability, because processing continues at the local site when the network goes down during a disruptive event. This independence supports a distributed architecture and improves fault tolerance and scalability. With these benefits, edge computing complements, and at times replaces, cloud services in the digital ecosystem.

Cases Where Edge Computing Excels

Edge computing thrives in use cases that need immediate decisions and responses. Autonomous vehicles process sensor data at the edge to interpret it instantaneously for safe navigation, rather than depending on distant servers.

In industrial automation, edge devices monitor machinery locally, checking for anomalies, raising alarms, or triggering shutdowns to prevent costly downtime and safeguard operations. With edge computing, smart city infrastructure provides timely, low-latency insights for traffic management, surveillance, and environmental monitoring.

Health care applications, such as remote patient monitoring, can process sensitive health data at the edge to support instant alerts and reduce reliance on cloud transmission, which helps with compliance with privacy regulations. Retail applications use edge computing for real-time inventory updates, customized advertising, and customer engagement that could not tolerate added latency.

Fast response times also benefit emerging technologies such as augmented reality, virtual reality, and streaming services, in which every millisecond affects the user experience. Edge computing is therefore important across all of these domains because of its inherent capacity to decentralize, distribute computing load, and reduce latency.

Practical Applications of Edge Computing

As businesses evolve, industries are adopting edge computing solutions for higher operational effectiveness and better customer experience. In manufacturing, for example, factories are increasingly fitted with edge devices that continuously monitor machinery performance and analyze sensor data locally to predict failures, schedule maintenance proactively, and avoid catastrophic breakdowns.

In retail, stores use edge computing at the point of sale to analyze customer behavior and track inventory levels, which speeds up transactions and enables personalized shopping experiences. In agriculture, sensor networks in the fields measure environmental parameters such as soil moisture, temperature, and humidity. Processing this data locally helps optimize irrigation and fertilization schedules.

Transportation is another major area for edge computing, covering fleet operations management, traffic flow management, and autonomous vehicle systems. Traffic cameras with edge computing can analyze congestion levels on the spot and adjust traffic signal timings, improving urban mobility.

Smart home appliances such as voice assistants and security cameras use edge computing to keep the data they process private and to reduce the amount of sensitive data that would otherwise move across networks.

Edge Computing in the Internet of Things (IoT)

Edge computing has become a necessity for the fast-growing population of IoT devices, ranging from wearable health monitors to industrial sensors. These devices generate large volumes of data that must be processed in near real time to yield meaningful insight.

Edge computing in IoT ecosystems filters incoming data, pre-processing and analyzing it before sending it to the cloud. This minimizes latency, saves bandwidth, and enhances security by reducing data exposure during transmission. A smart thermostat, for example, detects temperature deviations and adjusts HVAC operation at the edge, avoiding delays and unnecessary energy use.
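The thermostat example can be made concrete with a small decision function that runs entirely on the device. This is a minimal sketch: the name `hvac_action`, the setpoint, and the 0.5-degree deadband are illustrative assumptions, not taken from any specific product.

```python
def hvac_action(current_temp, setpoint, deadband=0.5):
    """Decide locally what the HVAC system should do.

    The deadband keeps the system from switching on and off for tiny
    fluctuations around the setpoint.
    """
    if current_temp > setpoint + deadband:
        return "cool"
    if current_temp < setpoint - deadband:
        return "heat"
    return "idle"

# Hypothetical readings against a 21.0 degree setpoint.
for temp in (19.8, 21.2, 22.4):
    print(temp, "->", hvac_action(temp, setpoint=21.0))
```

Because the decision needs only the latest reading and a stored setpoint, no network round trip is involved. The cloud could still receive periodic summaries for reporting.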

Edge computing also enables edge AI at scale, where machine learning models run directly on IoT devices or nearby gateways for predictive maintenance, anomaly detection, and smart decision-making without relying on the cloud.

A distributed architecture in IoT networks also supports scalability and resilience, especially in remote areas and critical infrastructure where continuous operation is essential. As the IoT sector continues to grow, edge computing will remain central to data management because of the volume and velocity of data that would otherwise be unmanageable.

Leveraging Edge Computing for Real-Time Analytics

Real-time analytics is a foundational capability of edge computing. Industries can use insights from real-time information to improve safety, increase efficiency, and enhance customer experience. Traditional, reactive processes thereby become proactive ones.

Take healthcare, for instance, where wearable devices do much of their analysis on the device itself. They analyze patients’ vital signs and immediately notify patients or their care providers when an anomaly is detected. Analytics at the edge also include predictive maintenance in manufacturing, where sensors monitor machine status and forecast breakdowns before they happen, decreasing unplanned downtime and saving on maintenance costs.
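One simple way to detect such anomalies on a constrained device is a rolling z-score: compare each new reading with the mean and standard deviation of a recent window. The sketch below is an assumption-laden illustration, not a clinical or industrial algorithm. The class name, window size, threshold, and heart-rate values are all invented for the example.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` looks anomalous relative to the window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Anomalous readings are kept out of the baseline window.
        if not is_anomaly:
            self.values.append(value)
        return is_anomaly

# Hypothetical heart-rate samples with one sudden spike.
detector = RollingAnomalyDetector()
heart_rates = [72, 74, 71, 73, 75, 72, 74, 140, 73]
flags = [detector.update(hr) for hr in heart_rates]
print(flags)
```

The same pattern applies to vibration or temperature readings in predictive maintenance. A production system would more likely use a trained model and domain-specific thresholds.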

Autonomous vehicles must make split-second decisions about distance from obstacles, lane changes, and so on. Media and entertainment streaming uses edge servers to deliver quality content with minimal buffering delay for the user.

Organizations that employ real-time analytics at the edge can expect faster data processing, more value from their insights, and improved operational agility. Prompt strategic decisions in turn give such enterprises a competitive edge.

Technical Aspects of Edge Computing

Components of Edge Computing Architecture

Edge computing architecture is made up of many components working in close coordination for localized data processing. At the base are the edge devices: sensors, cameras, cell phones, and industrial equipment. These devices usually incorporate some processing capability for early-stage data filtering and analysis.

Above the devices sit edge nodes or gateways, which are capable mini-servers or embedded systems that collate data, conduct local analytics, and manage device communication. Gateways usually have enough computational resources to run machine learning models or complex algorithms for real-time analytics and decision-making.

These are often supplemented by regional data centers and cloud-based platforms that provide additional storage, advanced analytics, and long-term data management. The design follows a distributed architecture in which data flows among these tiers, from device to gateway to cloud, according to how time-sensitive the processing is.

Another component is local data storage, which retains relevant data for a period of time so that it can still be accessed if the network connection is lost. Security measures at each tier maintain the integrity and confidentiality of data throughout the processing pipeline.

A complete architecture must balance computational resources, cost, and scalability according to the target application, such as smart cities or industrial automation.

Overview of Edge Computing Devices

Edge computing devices are a diverse collection of hardware that can be used in different settings and for different workloads. They include small embedded systems, high-powered servers, specialized sensors, and smart gateways.

For example, single-board computers such as the Raspberry Pi or NVIDIA Jetson are widely used for IoT applications. They are low-power, can be housed in ruggedized enclosures, and have enough processing capability for local processing. Industrial-grade edge devices are ruggedized to keep operating and communicating in extreme environments.

Smart sensors and cameras contain microprocessors and connectivity modules for on-device data processing: they first process images locally for identification, motion, or environmental detection, and then send only small data packets to a nearby gateway or cloud server.

Gateways act as intermediaries that collect data from one or more sensors, preprocess it, and transmit it to one or more cloud platforms. Some gateways come with edge AI capabilities, which support inference with complex models directly on the device.

Emerging trends point toward modular edge devices with hardware customized for applications in sectors such as healthcare, manufacturing, and transport. The growing recognition and use of AI-enabled edge devices points to the need for substantial computational resources at the edge, to host applications that require real-time analytics and keep their data stored locally.

Integration of Edge Computing with 5G Technology

5G has substantially enhanced edge computing. Its high-speed, low-latency networking supports distributed architectures built on edge devices and gateways.

The combination of edge computing and 5G allows near-instantaneous data transfer between devices and processing nodes, an indispensable feature for applications such as remote surgery, autonomous vehicles, and smart manufacturing. By reducing latency further and supporting real-time data processing and decision-making at very large scale, this joint infrastructure opens additional ways to realize edge computing.

5G network slicing provides a dedicated virtual network for edge workloads, optimized for efficiency and reliability. In such scenarios, critical data processing tasks can be prioritized where real-time analytics matter most.
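Network slicing itself is configured inside the operator's network and is not something application code implements. The prioritization idea, however, can be illustrated at the level of a single edge node with an ordinary priority queue. The sketch below is an analogy only. The class name, the priority numbers, and the task labels are hypothetical.

```python
import heapq
import itertools

class PriorityTaskQueue:
    """Serve the most critical task first (lower number = higher priority)."""

    def __init__(self):
        self._heap = []
        # The counter breaks ties so equal-priority tasks stay in FIFO order.
        self._counter = itertools.count()

    def submit(self, priority, task):
        heapq.heappush(self._heap, (priority, next(self._counter), task))

    def next_task(self):
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

queue = PriorityTaskQueue()
queue.submit(5, "upload hourly statistics")
queue.submit(0, "emergency stop signal")
queue.submit(2, "video analytics frame")
queue.submit(2, "traffic light update")

order = [queue.next_task() for _ in range(4)]
print(order)
```

Critical work is served first even though it was submitted after the routine upload, which mirrors the intent of giving time-sensitive traffic its own prioritized path.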

5G also enables seamless communication between edge devices and central servers, supporting cloud-integrated environments. The convergence accelerates the deployment of distributed architectures that let industries place computational resources close to data sources and enables innovations such as edge AI and IoT orchestration.

Complementary Technologies: Fog Computing

Decoding Fog Computing and Its Connection to Edge Computing

Fog computing extends edge computing principles by providing a layered architecture that connects local edge devices to centralized cloud servers. Whereas edge computing emphasizes processing at or close to the devices, fog computing adds a pool of nearby servers (the fog nodes) that sit geographically closer to data sources than traditional data centers.

These fog nodes serve as intermediaries, performing a substantial amount of processing, filtering, and analytics on the data before it is relayed. This hierarchy improves network efficiency, since less data passes over bandwidth-constrained links toward the cloud, and it reduces latency by decentralizing processing even further.

In a nutshell, edge and fog computing are complementary: edge computing handles simple, immediate processing of small amounts of data at the device, while fog computing gathers and analyzes larger amounts of data from numerous edge nodes. Together they form a highly distributed architecture capable of supporting complex, large-scale applications such as smart cities, with their much greater data volumes and processing demands.

The choice between the two depends on the latency, processing needs, and resources of the application in question. Combining edge and fog designs creates scalable, fault-tolerant, and efficient systems that make the most of computational capacity at every level.

Comparing Edge and Fog Computing Architectures

Both architectures aim to improve data processing efficiency, but edge and fog computing differ in scope, scale, and deployment. Edge computing is largely concerned with individual devices or their immediate surroundings and with low-latency processing at the data source. Fog computing introduces an additional layer, fog nodes, that connects several edge devices for local processing and coordination.

Usually, edge devices are responsible for initial filtering and pre-analytic processing, while fog nodes handle heavier processing, data aggregation, and real-time analytics across areas broader than any single site. This architectural hierarchy limits the amount of data sent to the cloud and maintains network efficiency.
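The division of labor between the two tiers can be sketched as follows. Each edge device reduces its own samples to a small summary, and a fog node merges the summaries into a regional view. The function names, device identifiers, and vehicle counts are hypothetical and serve only to illustrate the data flow.

```python
def edge_summary(device_id, vehicle_counts):
    """Edge tier: reduce per-minute vehicle counts to a compact summary."""
    return {
        "device": device_id,
        "samples": len(vehicle_counts),
        "vehicles": sum(vehicle_counts),
    }

def fog_aggregate(summaries):
    """Fog tier: merge summaries from several edge devices in one district."""
    samples = sum(s["samples"] for s in summaries)
    vehicles = sum(s["vehicles"] for s in summaries)
    # Empty summaries are skipped so the rate is always defined.
    active = [s for s in summaries if s["samples"] > 0]
    busiest = max(active, key=lambda s: s["vehicles"] / s["samples"], default=None)
    return {
        "devices": len(summaries),
        "vehicles_per_sample": vehicles / samples if samples else None,
        "busiest_device": busiest["device"] if busiest else None,
    }

# Two hypothetical traffic cameras reporting to one fog node.
cam_a = edge_summary("cam-a", [12, 15, 9])
cam_b = edge_summary("cam-b", [30, 28])
regional = fog_aggregate([cam_a, cam_b])
print(regional)
```

Only the `regional` result would need to go on to the cloud. The raw per-minute counts never leave the cameras, and the per-camera summaries never leave the district.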

Architecturally, edge computing is usually highly decentralized, with processing spread across a range of devices, while fog computing has a more clearly layered organization that balances local and regional processing. Both models emphasize distributed architecture and local data storage, but fog computing adds capacity, resilience, and longer-term data handling in complex environments.

Whether to use edge or fog depends on latency sensitivity, data volume, and the complexity of the infrastructure. Many implementations are therefore hybrid: edge devices handle immediate reactions, within a fog architecture that adds local analytics.

Benefits of Using Fog Computing Alongside Edge Computing

Fog computing complements edge computing with several advantages for large-scale, data-intensive applications. The most important is scalability: fog nodes consolidate data processing for multiple edge devices, which makes the distributed architecture much simpler to manage and reduces network congestion.

Fog computing also improves fault tolerance: if an edge device goes down, adjacent fog nodes can take over its role and keep the service running. Such redundancy is a requirement in critical systems such as health monitoring or industrial automation, where reliable uptime is essential.
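A minimal sketch of this failover idea: each workload has an ordered list of nodes that may run it, and the first healthy one is chosen. The node names and the health map are made up for the example. A real deployment would obtain health status from heartbeats or an orchestrator.

```python
def pick_node(preferred_nodes, healthy):
    """Return the first healthy node in preference order, or None."""
    for node in preferred_nodes:
        if healthy.get(node, False):
            return node
    return None

# Hypothetical preference order: the local edge device first, then fog nodes.
candidates = ["edge-device-7", "fog-node-east", "fog-node-west"]

health = {"edge-device-7": True, "fog-node-east": True, "fog-node-west": True}
before_failure = pick_node(candidates, health)

health["edge-device-7"] = False  # the edge device goes down
after_failure = pick_node(candidates, health)

print(before_failure, "->", after_failure)
```

The workload moves from the failed device to the nearest fog node without any change to the candidate list, which is the essence of the redundancy described above.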

Offloading heavier tasks from edge devices to fog nodes decreases the computational demand on each device, which extends battery life and reduces hardware costs. Fog nodes also facilitate advanced analytics and machine learning, enabling smarter real-time analytics across regional datasets.

Security is enhanced because data is processed locally, which limits the transmission of sensitive information. In addition, this layered approach simplifies cloud integration, since only processed, relevant data reaches centralized servers, optimizing bandwidth and storage costs.

Overall, combining edge and fog computing yields a hybrid ecosystem that is resilient, scalable, and efficient enough to meet the operational demands of a variety of domains.

Performance Enhancements Through Edge Computing

Reducing Latency in Data Processing

Latency is the delay between data generation and processing, and it is one of the most important factors influencing system performance. Applications where milliseconds matter rely on edge computing to address this challenge: processing data locally significantly reduces the time it takes to analyze and act on it.

In a conventional cloud architecture, data may take from hundreds of milliseconds up to seconds to travel over networks to a centralized data center and back. In time-bound applications such as autonomous vehicles or industrial robots, this latency can lead to unsafe situations or operational inefficiencies. Edge computing brings the computation closer to the data source, enabling timely decisions based on immediate data analysis.
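To see why this matters for a moving vehicle, it helps to convert latency into distance travelled before a decision arrives. The latencies below (200 ms for a cloud round trip, 10 ms for local processing) and the 100 km/h speed are illustrative assumptions, not measurements.

```python
def distance_during_delay(speed_kmh, latency_ms):
    """Metres travelled at `speed_kmh` while waiting `latency_ms` milliseconds."""
    metres_per_second = speed_kmh / 3.6
    return metres_per_second * latency_ms / 1000

# Assumed figures for illustration only.
cloud_m = distance_during_delay(100, 200)
edge_m = distance_during_delay(100, 10)

print(f"cloud round trip: {cloud_m:.2f} m travelled before a response")
print(f"local processing: {edge_m:.2f} m travelled before a response")
```

At these assumed values the vehicle covers roughly 5.6 m while waiting on the cloud, against about 0.3 m with local processing.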

One thing I have realized is that latency reduction depends not only on where resources are deployed, which is the core idea of edge computing, but also on hardware optimization and the quality of the networks themselves. The recent trend toward edge AI, running models directly on devices, is redefining what edge computing can deliver. Over time, latency reduction becomes a strategic capability that enables applications such as real-time traffic management, health monitoring, and responsive security systems.

Reduced latency also makes the user experience more rewarding, particularly in AR/VR applications, live streaming, and gaming, where delay affects perceived quality and immersion. Organizations that invest in edge computing will be better positioned against competitors because they can offer faster, more responsive services.

Network Efficiency and Local Data Storage

One of the biggest advantages of edge computing is improved network efficiency. If processing occurs at the edge, only relevant, summarized, or filtered data needs to be shipped to cloud servers, which eases network congestion and cuts bandwidth use. This is especially advantageous where connectivity is limited or unreliable, as at remote industrial sites, in rural areas, or in disaster zones.

Complementing this is local data storage, which allows edge devices to retain critical information temporarily or permanently. This makes it possible to operate offline, cache data, and conduct historical analysis without an uninterrupted network connection. When network connectivity is re-established, the retained data is synchronized with the cloud to maintain consistency and continuity.
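This offline-then-synchronize behavior is often called store-and-forward. The sketch below uses Python's built-in `sqlite3` module as the local store. The class name, table layout, and the `send` callback are assumptions made for illustration: in practice `send` would wrap whatever upload mechanism the deployment uses.

```python
import json
import sqlite3

class StoreAndForwardBuffer:
    """Queue readings locally and upload them once the network is back."""

    def __init__(self, path=":memory:"):
        # ":memory:" keeps the example self-contained; a real device
        # would point this at a file on persistent storage.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox "
            "(id INTEGER PRIMARY KEY, payload TEXT)"
        )

    def record(self, reading):
        self.db.execute(
            "INSERT INTO outbox (payload) VALUES (?)", (json.dumps(reading),)
        )
        self.db.commit()

    def pending(self):
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def flush(self, send):
        """Upload queued readings in order; stop at the first failure."""
        rows = self.db.execute(
            "SELECT id, payload FROM outbox ORDER BY id"
        ).fetchall()
        sent = 0
        for row_id, payload in rows:
            if not send(json.loads(payload)):
                break  # still offline: keep this and later rows queued
            self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
            sent += 1
        self.db.commit()
        return sent

buffer = StoreAndForwardBuffer()
for value in (20.1, 20.4, 20.9):
    buffer.record({"sensor": "temp-1", "value": value})

offline_sent = buffer.flush(lambda reading: False)  # simulated outage
uploaded = []
online_sent = buffer.flush(lambda reading: uploaded.append(reading) or True)
print(offline_sent, online_sent, buffer.pending())
```

Readings are deleted from the local queue only after a successful upload, so an outage in the middle of a flush loses nothing.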

An interesting insight is that local data storage fosters data sovereignty and privacy, because sensitive information need not transit potentially insecure networks. Response times improve as well, since data does not get caught in transmission queues, which is crucial for real-time analytics scenarios such as health monitoring or industrial control.

Putting efficient storage into practice at the edge must take into account hardware capabilities, data lifecycle policies, and security standards that prevent unauthorized access. All in all, network efficiency and local data storage are cornerstones of a resilient, scalable distributed architecture that puts computing resources precisely where they are needed most.

Distributed Architecture and Its Impact on Computational Resources

The distributed nature of the architecture forms the basis of edge computing. It consists of numerous processing nodes at various locations. This model stands in stark contrast to a monolithic cloud infrastructure and offers several strategic advantages.

The distributed architecture supports scaling: systems can grow organically through the addition of new edge devices or fog nodes without reworking the entire setup. It also aids fault tolerance: when one node fails, others keep functioning, so operations continue with minimal disruption. Lastly, it spreads computational resources and balances load dynamically according to demand, optimizing both performance and energy consumption.
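Dynamic load balancing can take many forms. One of the simplest is a greedy rule that sends each new task to whichever node currently has the least work. The sketch below shows that rule only. The task costs and node names are hypothetical, and real orchestrators weigh many more factors such as locality, memory, and energy.

```python
def assign_tasks(task_costs, node_names):
    """Greedy least-loaded placement.

    Each task goes to the node with the smallest accumulated cost so far.
    Ties go to the node listed first.
    """
    loads = {name: 0 for name in node_names}
    placement = {}
    for task, cost in task_costs.items():
        target = min(loads, key=loads.get)
        loads[target] += cost
        placement[task] = target
    return placement, loads

# Hypothetical tasks with relative costs, spread over two edge nodes.
tasks = {"t1": 5, "t2": 3, "t3": 2, "t4": 4}
placement, loads = assign_tasks(tasks, ["edge-a", "edge-b"])
print(placement)
print(loads)
```

Adding a third node requires only another name in the list, which reflects the organic scaling described above.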

I see the distributed architecture as a necessary enabler for organizations to create autonomous, resilient systems able to deal with local fluctuations and operational particulars. In industrial environments, this means that decision-makers at the local level can react quickly to changes, drastically reducing their dependence on a central authority or cloud service.

Challenges in such an architecture include maintaining data consistency and synchronization as well as securing data flowing between nodes. Even so, the rise of edge orchestration and containerization, together with emerging security protocols, is making these challenges more tractable. In turn, distributed architecture improves utilization of computational resources, decreases latency, and fosters innovation across domains seeking smart, agile, and efficient operations.

Conclusion

Edge computing represents a major shift in the way data is processed, analyzed, and used in an interconnected world. It decentralizes computing resources, placing them closer to data sources.

This offers significant advantages in latency, network utilization, and real-time analytics. Its architecture, comprising edge devices, gateways, and a distributed design, enables organizations to build resilient, scalable, and secure systems across sectors like IoT, manufacturing, healthcare, and smart cities. Integrating it with complementary technologies, such as fog computing and advanced 5G connectivity, will open further doors to innovation.

This matters because, as digital ecosystems advance, they will increasingly rely on edge computing to create intelligent, responsive, and highly efficient environments that will define technology’s future landscape.

Edge computing is a fundamentally different paradigm that will affect the way data-dependent applications function. It will make these applications faster, more efficient in their use of network resources, and capable of real-time decision-making. Its layered architecture, integration with emerging technologies, and synergy with fog computing demonstrate its versatility and significance in modern digital transformation. As industries continue to demand faster, smarter, and more secure systems, edge computing will remain at the forefront of innovative solutions for a connected future.

FAQs

What Are the Advantages of Edge Computing?

– Reduced latency

– Reduction in bandwidth usage

– Data privacy and security

– Increased availability (offline use)

Is Edge Computing Safer than Cloud Computing?

Edge computing can reduce security risks by keeping sensitive data local, but it also introduces new challenges, such as securing many distributed edge devices.

What Are the Challenges of Edge Computing?

– Management of distributed infrastructure.

– Limited computing power on the edge.

– Security risks (more attack surfaces).

How Does 5G Complement Edge Computing?

5G connectivity, with its high speed and low latency, strengthens edge computing for real-time applications such as augmented or virtual reality (AR/VR) and autonomous systems.

What Is Fog Computing, and How Is It Related to Edge Computing?

Fog computing occupies the middle ground between cloud computing and the network edge, acting as an intermediary layer, whereas edge computing handles data processing directly on or near the devices themselves.

Does Edge Computing Work Without Cloud?

Yes, edge computing can work independently; however, a hybrid model (edge+cloud) is very commonly used for scalability and analytics.

What Is the Future of Edge Computing?

The next wave will bring real-time processing, smart automation, and decentralized applications. Edge computing will play an increasingly vital role as IoT, AI, and 5G become more prevalent.
